Strict rules will apply to so-called high-risk artificial intelligence systems under a European Commission proposal reported by the BBC, and they will also affect the algorithms used by police and in recruitment.

Vague and full of loopholes

Experts who spoke to the BBC say the rules are vague and contain loopholes. For example, the draft does not address the military use of artificial intelligence or the systems used by public authorities to “protect public safety.”

The proposed list of AI systems banned under the draft includes:

- AI systems used for general surveillance;

- AI systems used for social scoring;

- AI systems that exploit information or “predictions” about a person or group of people in order to target their weaknesses.

European policy analyst Daniel Leufer tweeted that these definitions are very vague.

“How can we determine what is harmful to someone? Who gets to assess that?” he wrote.

High-risk artificial intelligence

Member states would have to exercise greater oversight of these systems, including designating assessment bodies to test, validate, and verify them.

Moreover, any company that develops prohibited services, or fails to provide adequate information about them, may face fines of up to 4 percent of its global revenue, similar to the fines for violations of the General Data Protection Regulation (GDPR).

According to Leufer, examples of high-risk AI include:

- Systems that set priorities in dispatching emergency services;

- Systems that determine access to educational institutions or assign people to them;

- Recruitment algorithms;

- Credit rating algorithms;

- Algorithms used for individual risk assessments;

- Crime prediction algorithms.

Leufer added that the proposals “should be extended to include all artificial intelligence systems in the public sector, regardless of the level of risk identified.”

“This is because people are generally unable to choose whether to interact with an AI system in the public sector,” he said.

In addition to requiring new AI systems to be under human supervision, the Commission is also proposing that a so-called “kill switch” be built into high-risk AI systems. This could be an actual off button or some other way to shut down the system immediately if needed.

“AI vendors will be focusing very closely on these proposals, because they will require a fundamental change in how AI is designed,” says Herbert Swaniker, a lawyer at the law firm Clifford Chance in London.

‘Stop being manipulative’

Meanwhile, Michael Veale, who studies digital rights and regulation at University College London, highlighted a clause that would force organizations to disclose when they are using deepfake technology, a particularly controversial use of AI to create fake people or to manipulate photos and video clips of real people.

Veale also said that the legislation is aimed primarily at IT companies and consultants who sell AI technology to schools, hospitals, police and employers, often for “totally meaningless use.”

But he added that tech companies that use AI to “manipulate users” would also need to change their practices.

The European Commission walks a tightrope

With this draft legislation, the European Commission is walking a very dangerous tightrope. On the one hand, it must ensure that AI is indeed a “tool” used “only for human well-being”. On the other hand, it must not limit the European Union’s ability to compete with the United States and China in technological innovation.

Additionally, the draft recognizes that AI is already an integral part of many aspects of our lives.

The European Center for Not-for-Profit Law, which also operates in Hungary and contributed to the European Commission’s white paper, also said that there are “a lot of ambiguities and loopholes in the bill.”

“The European Union’s approach of defining risk in binary terms, as either high or low, is careless at best and dangerous at worst, because it lacks the context and nuance needed for the complex AI ecosystem that already exists today.

“First, the Commission must consider the risks of AI systems within a rights-based framework, as risks to human rights, the rule of law, and democracy.

“Second, the Commission should reject the overly simplistic low/high risk structure and consider a tier-based approach to AI risk assessment,” the organization’s analysis reads.

These numerous ambiguities also suggest that the details may still be refined before the bill is officially announced next week. Implementing the regulation is a process that will take years.

(BBC)

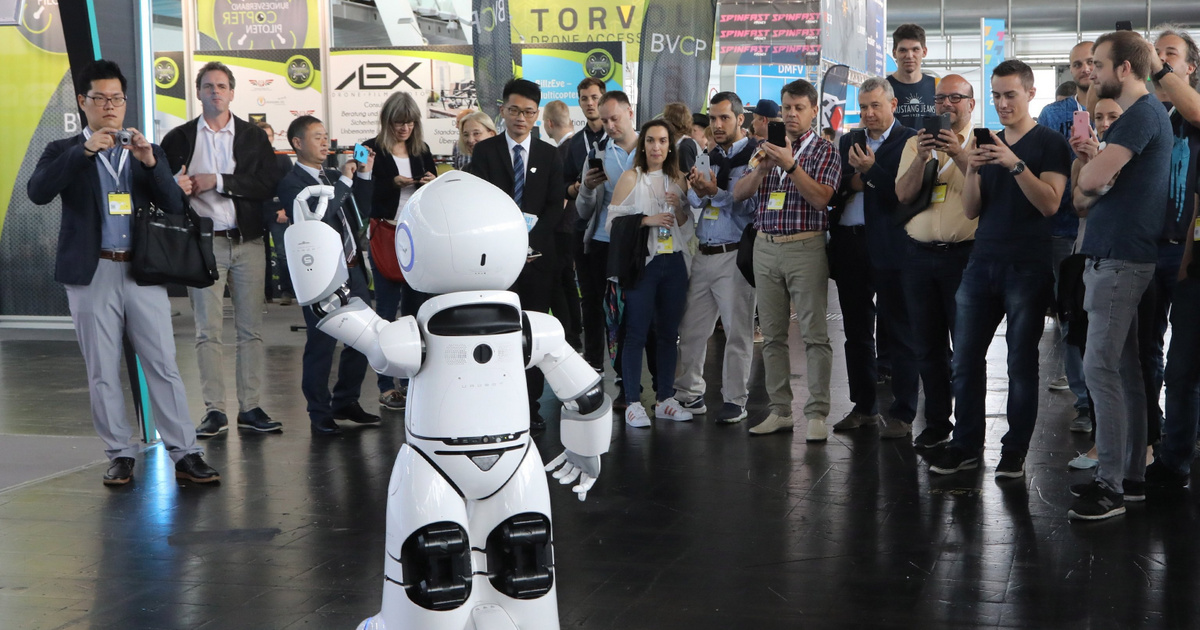

(Cover photo: Visitors watch a dancing robot at CeBIT, Europe’s largest festival of digital business and innovation, in Hanover on June 12, 2018. Photo: Focke Strangmann / MTI / EPA)
